Activation Function in Neural Networks

An artificial neural network is a computational model based on biological neural networks. It is loosely modelled on the working of the human brain and consists of a large number of connected processing units that work together to process information. These processing units are known as "neurons". Looking at the biological model first helps us understand the role an 'activation function' plays in a biological neural network, and from there the actual use of activation functions in artificial neural networks.

In the picture of the biological neural net, the area between two neurons where they communicate is known as a synapse. The neuron receives signals from other neurons through its dendrites. The weight (strength) associated with a dendrite, called the synaptic weight, gets multiplied by the incoming signal. The signals from the dendrites are accumulated in the cell body, and if the strength of the resulting signal is above a certain threshold, the neuron passes the message on to the axon. Otherwise, the signal is killed by the neuron and is not propagated further.

Activation functions are really important for an artificial neural network to learn. The activation function introduces non-linear properties into the network. Its main purpose is to convert the input signal of a node into an output signal, which is then used as an input to the next layer of the network.

3. Rectified Linear Unit ( ReLU ) :

Recently, ReLU has gained a lot of popularity in the field of deep learning. Mathematically, it is very simple:

f(x) = max(0, x)

This means a rectified linear unit outputs 0 if the input is less than or equal to 0, and outputs the input itself if the input is greater than 0. The mathematical form shows how simple and efficient this activation function is: it is plain thresholding, and for positive inputs the gradient is 1, so back-propagated errors are not squeezed the way they are by the sigmoid function.

Research has shown that ReLU results in faster training for large networks. Most deep learning models use ReLU nowadays, as it mitigates the vanishing gradient problem. But the ReLU activation function has some limitations too.

1. It should only be used within the hidden layers of a neural network model.

2. Unfortunately, ReLU units can be fragile during training and can "die". If the input to a unit is less than 0 during the forward pass, the unit outputs 0 and its local gradient is also 0. Because gradients are multiplied together during back-propagation, no gradient flows back through that unit. If this happens for every input, the weights do not get updated and the unit stops learning.

3. The outputs are not zero centered, which is also the case for the sigmoid function.

Note: These issues are addressed by variants such as Leaky ReLU and Parametric ReLU.
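To make the "dying" behaviour concrete, here is a minimal Python sketch of ReLU and Leaky ReLU together with their gradients. The function names and the slope value `alpha = 0.01` are assumptions chosen for illustration, not something taken from a particular library.

```python
def relu(x: float) -> float:
    """ReLU: pass positive inputs through, clamp everything else to 0."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Gradient of ReLU (taken as 0 at x = 0 by convention)."""
    return 1.0 if x > 0 else 0.0


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: keep a small slope `alpha` for negative inputs."""
    return x if x > 0 else alpha * x


def leaky_relu_grad(x: float, alpha: float = 0.01) -> float:
    """Gradient of Leaky ReLU: never exactly 0, so the unit can recover."""
    return 1.0 if x > 0 else alpha


for x in (-2.0, 0.5, 3.0):
    print(f"x={x:+.1f}  relu={relu(x):.2f} (grad {relu_grad(x):.2f})  "
          f"leaky={leaky_relu(x):.2f} (grad {leaky_relu_grad(x):.2f})")
```

For the negative input the ReLU gradient is exactly 0, so nothing flows back through that unit, while the leaky variant still passes a small gradient.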

4. SoftMax Function :

The sigmoid function is easy to apply, and ReLUs do not suffer from vanishing gradients during training. However, when you want to deal with multi-class classification problems, they cannot help much. The sigmoid function can only handle two classes, which is not what we want.

Mathematically, for a vector of scores z over K classes,

softmax(z)_i = exp(z_i) / (exp(z_1) + ... + exp(z_K))

The softmax function squashes each output to between 0 and 1, just like a sigmoid function. But it also divides each output by the sum of all outputs, so that the outputs add up to 1. The output of the softmax function is equivalent to a categorical probability distribution: it tells you the probability of each class being the true one.

The softmax can be used for any number of classes. It is used with hundreds or even thousands of classes, for example in object recognition problems where there are hundreds of different possible objects.
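The description above can be sketched in a few lines of plain Python. This is only an illustrative version: the function name `softmax` and the example scores are assumptions, and subtracting the largest score before exponentiating is a common numerical-stability trick rather than part of the definition.

```python
import math


def softmax(scores):
    """Turn a list of real-valued scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])  # hypothetical scores for three classes
print([round(p, 3) for p in probs])
print("sum =", round(sum(probs), 6))
```

The largest score gets the largest probability, every output lies between 0 and 1, and the outputs add up to 1.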

5. Swish Activation Function :

According to the research paper by Prajit Ramachandran, Barret Zoph and Quoc V. Le at Google Brain (https://arxiv.org/pdf/1710.05941.pdf), the 'Swish' activation function tends to work better than the ReLU activation function.

The experiments performed by the Google Brain researchers indicate that Swish tends to work better than ReLU on deeper models across a number of challenging datasets. For example, simply replacing ReLUs with Swish units improves top-1 classification accuracy on ImageNet by 0.9% for Mobile NASNet-A and 0.6% for Inception-ResNet-v2.

Mathematically, the Swish function can be represented as

f(x) = x · sigmoid(βx)

where β is either a constant or a trainable parameter.

Like ReLU, Swish is unbounded above and bounded below. Unlike ReLU, Swish is smooth and non-monotonic. In fact, the non-monotonicity of Swish distinguishes it from most common activation functions.

The simplicity of Swish and its similarity to ReLU make it easy for practitioners to replace ReLUs with Swish units in any neural network.
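As a rough illustration of the formula f(x) = x · sigmoid(βx), the sketch below implements Swish in plain Python and evaluates it at a few points. The function names and the default `beta = 1.0` are assumptions for this example; the simple sigmoid used here is only meant for moderate input values.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, fine for moderate x (very negative x can overflow)."""
    return 1.0 / (1.0 + math.exp(-x))


def swish(x: float, beta: float = 1.0) -> float:
    """Swish: the input scaled by a sigmoid gate of itself."""
    return x * sigmoid(beta * x)


for x in (-5.0, -1.0, 0.0, 1.0, 10.0):
    print(f"swish({x:+.1f}) = {swish(x):+.4f}")
```

The value at -1 is lower than the values at both -5 and 0, which is the non-monotonic dip, while for large positive inputs Swish stays close to the input itself, much like ReLU.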

A neuron has a physical structure that consists of a cell body, an axon that sends messages to other neurons, and dendrites that receive signals or information from other neurons.

Figure: Biological Neural Net

The actual job of the activation function is to decide whether or not to pass the signal on. In this case, it is a simple step function with a single parameter: the threshold. When we learn something new, the threshold and the synaptic weights of the neuron change. This creates new connections among neurons, which is how the brain learns new things.
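The threshold behaviour described here can be sketched as a tiny Python model. Everything in it (the names `step` and `threshold_neuron`, the signal values and the weights) is hypothetical and only meant to illustrate the idea of a step function with a single threshold parameter.

```python
def step(total: float, threshold: float) -> int:
    """Step activation: 1 (fire) if the accumulated signal exceeds the threshold."""
    return 1 if total > threshold else 0


def threshold_neuron(signals, weights, threshold):
    """Multiply each incoming signal by its synaptic weight, accumulate, then threshold."""
    total = sum(s * w for s, w in zip(signals, weights))
    return step(total, threshold)


signals = [1.0, 0.0, 1.0]   # hypothetical incoming signals
weights = [0.6, 0.9, 0.3]   # hypothetical synaptic weights

# The weighted sum is about 0.9, so the neuron fires for a threshold of 0.8
# but stays silent when the threshold is raised to 1.0.
print(threshold_neuron(signals, weights, threshold=0.8))
print(threshold_neuron(signals, weights, threshold=1.0))
```

Changing either the weights or the threshold changes which inputs make the neuron fire, which mirrors the description of learning above.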

In the case of an artificial neural network:

Figure: A simple artificial neuron

Specifically, in the above artificial neuron, the inputs are multiplied by their respective weights. This is followed by accumulation (summation plus the addition of a bias). Finally, we apply an activation function f(x) to the result to get the output of that layer and feed it as an input to the next layer.

What if 🤔 we do not use an activation function?

Without an activation function, our output signal would be a simple linear function of the input. The main purpose of the activation function is to introduce non-linearity into the network. Without it, a neural network, however many layers it has, would simply be a linear regression model, which does not perform well on complex data. We build an artificial neural network so that it can learn and compute complicated functions. Linear functions can be computed using simple linear regression models, but those models cannot capture non-linear relationships. So we use activation functions to deal with more complex, non-linear data such as images, video, audio etc.
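A small sketch can show why stacking layers without an activation function gains nothing. The example below uses one-dimensional "layers" with made-up weights and biases (all of them assumptions for illustration): two linear layers collapse into a single linear function, while inserting a ReLU between them breaks that equivalence.

```python
def linear_layer(x: float, w: float, b: float) -> float:
    """A one-input 'layer': multiply by a weight and add a bias."""
    return w * x + b


def two_linear_layers(x: float) -> float:
    """Two stacked layers with no activation in between."""
    return linear_layer(linear_layer(x, 2.0, 1.0), -3.0, 0.5)


def collapsed(x: float) -> float:
    """The same mapping as one layer: -3 * (2x + 1) + 0.5 = -6x - 2.5."""
    return -6.0 * x - 2.5


def two_layers_with_relu(x: float) -> float:
    """Same weights, but with a ReLU applied to the hidden value."""
    hidden = max(0.0, linear_layer(x, 2.0, 1.0))
    return linear_layer(hidden, -3.0, 0.5)


for x in (-2.0, 0.0, 1.5):
    print(x, two_linear_layers(x), collapsed(x), two_layers_with_relu(x))
```

The first two columns of results always agree, so the second layer added no expressive power. The ReLU version differs for the negative input, which is exactly the non-linearity the activation function contributes.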

Whenever we plot a non-linear function on a graph, we obtain a curve. We need a neural network that can represent any function mapping inputs to outputs. Neural networks are known as universal function approximators, which means that, given enough hidden units, they can approximate a very wide class of functions.

So we add an activation function f(x) to the neural network so that it can learn complex forms of data. These activation functions transform the input signals non-linearly.

Types of Activation function :

There are many types of activation function, but we generally concentrate on a few popular ones. Broadly, they fall into two groups:

1 . Linear Activation Function

2 . Non linear Activation Function

A linear activation function transforms the input signal linearly, as is done in linear regression models.

This blog focuses on the use of activation functions in neural networks, which means they need to be non-linear. So here we will only discuss the variants of non-linear activation function that can be used in an artificial neural network.

The variants of non-linear activation function are :-

1. Sigmoid Function :

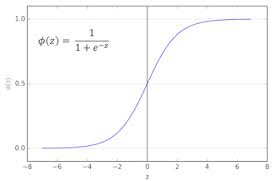

It is also known as the Logistic Activation Function. It is an activation function of the form

f(x) = 1 / (1 + exp(-x)). It takes a real-valued number (input) and squashes it into the range between 0 and 1. It is preferred in the output layer when our end goal is to predict a probability. Large negative numbers are pushed towards 0 and large positive numbers towards 1. It is an S-shaped curve.

Figure: Sigmoid Activation Function
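As a quick check of the formula f(x) = 1 / (1 + exp(-x)), here is a minimal Python sketch. The function name and the sample inputs are chosen for illustration only, and this straightforward version is meant for moderate input values.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function: squashes a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


for x in (-6.0, -1.0, 0.0, 1.0, 6.0):
    print(f"sigmoid({x:+.1f}) = {sigmoid(x):.4f}")
```

The outputs approach 0 for large negative inputs and 1 for large positive inputs without ever reaching either value, and the input 0 maps to exactly 0.5.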

There are several drawbacks of the sigmoid function which have made it fall out of popularity. Here we will discuss four major drawbacks.

(i) Vanishing Gradient : This problem occurs when we train a neural network model using gradient-based optimisation techniques. It degrades the accuracy of the model and increases the training time. During back-propagation, i.e. moving backward through the network and calculating the gradient of the error (the difference between the actual value and the predicted value) with respect to the weights, the gradients tend to get smaller and smaller as we keep moving backward. This means that the neurons in the earlier layers learn very slowly compared to the neurons in the later layers, which results in slow training of the early layers.

This is the effect the vanishing gradient problem has on our neural network model.

(ii) Not Zero Centered : Its output is always between 0 and 1, so it is not zero centered. This makes the gradient updates zig-zag in different directions and makes optimisation harder.

(iii) Slow Convergence : Sigmoids have slow convergence.

(iv) Computationally Expensive : The exponential function is computationally expensive compared with other non-linear activation functions.
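The vanishing gradient drawback can be illustrated numerically. The sketch below is a deliberate simplification: it ignores the weights entirely and only looks at the sigmoid's own derivative, which is at most 0.25, multiplied once per layer. The helper names are assumptions made for this example.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid: s * (1 - s), which peaks at 0.25 when x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def max_gradient_factor(num_layers: int) -> float:
    """Best-case product of sigmoid derivatives across `num_layers` layers
    (weights ignored, a simplifying assumption)."""
    return sigmoid_grad(0.0) ** num_layers


print("peak derivative:", sigmoid_grad(0.0))
print("derivative when saturated (x = 5):", round(sigmoid_grad(5.0), 5))
for n in (1, 5, 10):
    print(f"{n:2d} layers -> factor {max_gradient_factor(n):.2e}")
```

Even in the best case the factor shrinks geometrically with depth, which is why the earliest layers receive very small updates.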

2. Tanh Function :

It is also known as the hyperbolic tangent activation function. Similar to the sigmoid function, tanh takes a real-valued number and squashes it into a range, in this case between -1 and 1. Unlike sigmoid, the output of the tanh activation function is zero centered, as the range of the function lies between -1 and 1.

Strongly negative inputs are mapped close to -1, strongly positive inputs close to +1, and inputs near zero are mapped near zero. For this reason tanh is generally preferred over the sigmoid function.

Its mathematical formula is f(x) = (1 - exp(-2x)) / (1 + exp(-2x)).

Figure: Hyperbolic Tangent Function

Drawback of tanh function :

(i) The tanh function also suffers from the vanishing gradient problem: its gradient is close to zero when the function saturates.
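The tanh formula and its saturation behaviour can be checked with a short Python sketch. The helper names are assumptions for illustration; `math.tanh` from the standard library is used as the reference value.

```python
import math


def tanh_from_formula(x: float) -> float:
    """tanh written out as (1 - exp(-2x)) / (1 + exp(-2x))."""
    return (1.0 - math.exp(-2.0 * x)) / (1.0 + math.exp(-2.0 * x))


def tanh_grad(x: float) -> float:
    """Derivative of tanh: 1 - tanh(x)^2, which shrinks as |x| grows."""
    return 1.0 - math.tanh(x) ** 2


for x in (-5.0, -0.7, 0.0, 0.7, 5.0):
    print(f"x={x:+.1f}  formula={tanh_from_formula(x):+.4f}  "
          f"math.tanh={math.tanh(x):+.4f}  grad={tanh_grad(x):.4f}")
```

The formula matches the library function, the outputs are centered around zero, and the gradient drops towards zero for inputs far from the origin, which is the saturation problem mentioned above.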

CONCLUSION :

Due to its popularity, ReLU is used everywhere across the deep learning community. Thanks to its simplicity and effectiveness, ReLU has become the default activation function for developers.

With further research someday Swish may be able to replace ReLU.
